The first major legal challenge to police use of automated facial recognition (AFR) surveillance begins in Cardiff later.

Ed Bridges, whose image was taken while he was shopping, says weak regulation means AFR breaches human rights.

The civil rights group Liberty says current use of the tool is equivalent to the unregulated taking of DNA or fingerprints without consent.

South Wales Police defends the tool but has not commented on the case.

In December 2017, Mr Bridges was having a perfectly normal day.

“I popped out of the office to do a bit of Christmas shopping and on the main pedestrian shopping street in Cardiff, there was a police van,” he told BBC News.

“By the time I was close enough to see the words ‘automatic facial recognition’ on the van, I had already had my data captured by it.

“That struck me as quite a fundamental invasion of my privacy.”

The case could provide crucial guidance on the lawful use of facial recognition technology.

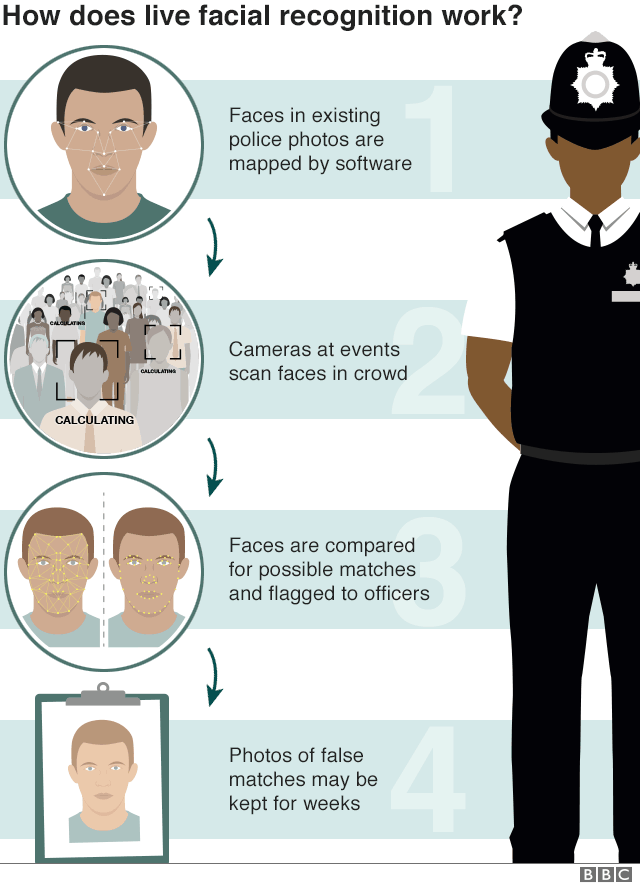

Facial recognition is a far more powerful policing tool than traditional CCTV, because the cameras take a biometric map of the face of each person who passes, creating a numerical code for every face.

These biometric maps are unique to each individual.

“It is just like taking people’s DNA or fingerprints, without their knowledge or their consent,” said Megan Goulding, a lawyer from the civil liberties group Liberty which is supporting Mr Bridges.

However, unlike DNA or fingerprints, there is no specific regulation governing how police use facial recognition or manage the data gathered.

Liberty argues that even if there were regulations, facial recognition breaches human rights and should not be used.

The tool allows the facial images of vast numbers of people to be scanned in public places such as streets, shopping centres, football crowds and music events.

The captured images are then compared with images on police “watch lists” to see if they match.
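The matching step described above can be illustrated with a toy sketch. It assumes, purely for illustration, that each face has already been reduced to a short list of numbers and that a "match" is declared when the cosine similarity between two such codes reaches a threshold. The vectors, the 0.8 threshold and the function names are hypothetical and do not describe the system any police force actually uses.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two numerical face codes: 1.0 means identical direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def match_against_watch_list(face_code, watch_list, threshold=0.8):
    """Return the watch-list entries whose code is at least `threshold` similar.

    Hypothetical example: real systems use much longer codes and their own
    matching rules and thresholds.
    """
    alerts = []
    for name, listed_code in watch_list.items():
        if cosine_similarity(face_code, listed_code) >= threshold:
            alerts.append(name)
    return alerts


# Made-up three-number "face codes" for two watch-list entries and a passer-by.
watch_list = {
    "entry_A": [0.9, 0.1, 0.3],
    "entry_B": [0.1, 0.8, 0.5],
}
passer_by = [0.85, 0.2, 0.35]

print(match_against_watch_list(passer_by, watch_list))                 # strict threshold
print(match_against_watch_list(passer_by, watch_list, threshold=0.4))  # looser threshold
```

With the strict threshold only `entry_A` is flagged; loosening it to 0.4 flags `entry_B` as well, even though that code is quite different. The choice of threshold therefore trades missed matches against false alerts.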

“If there are hundreds of people walking the streets who should be in prison because there are outstanding warrants for their arrest, or dangerous criminals bent on harming others in public places, the proper use of AFR has a vital policing role,” said Chris Phillips, former head of the National Counter Terrorism Security Office.

“The police need guidance to ensure this vital anti-crime tool is used lawfully.”

Facial recognition’s usefulness for spotting terrorist suspects, for example, and preventing atrocities is clear, but Liberty says the technology is being used for much more mundane policing, such as catching pickpockets.

Liberty also says:

- images of people on watch lists can come from anywhere

- police have not ruled out taking watch list images from social media

- some lists include people not wanted for any crime

- AFR has been used to look for people with mental health conditions

Mr Bridges had his image captured by facial recognition for a second time at a peaceful protest against the arms trade.

His legal challenge argues the use of the tool breached his human right to privacy as well as data protection and equality laws.

Three UK police forces have used facial recognition in public spaces since June 2015:

- South Wales Police

- Metropolitan Police

- Leicestershire Police

Liberty believes South Wales Police has used facial recognition more than either of the other two forces, with about 50 deployments. These included the policing of the Champions League final in Cardiff in June 2017, where it emerged that 2,297 of the 2,470 potential matches made, about 93%, were wrong.

South Wales Police has gone to considerable lengths to explain its use of facial recognition and last year described it as “lawful and proportionate”.

‘Misidentifying minorities’

When the technology was tested recently in London, one man was fined for refusing to have his image captured.

BBC News also reported that at least three chances to assess how well the systems dealt with ethnicity had been missed by police over five years.

Civil liberties groups say studies have shown facial recognition discriminates against women and those from ethnic minorities, because it disproportionately misidentifies those people.

“If you are a woman or from an ethnic minority and you walk past the camera, you are more likely to be identified as someone on a watch list, even if you are not,” said Ms Goulding.

“That means you are more likely to be stopped and interrogated by the police.

“This is another tool by which social bias will be entrenched and communities who are already over-policed simply get over-policed further.”

Liberty says the risk of false-positive matches of women and ethnic minorities has the potential to change the nature of public spaces.

Last week San Francisco became the first US city to ban the use of the technology by police and other city agencies, following fears about its reliability and infringement of people’s liberty and privacy.

The information commissioner and the surveillance camera commissioner have both become involved in Mr Bridges’s case, as has the Home Office, indicating the high level of interest and concern about the parameters within which facial recognition can lawfully operate.

The case is expected to last three days, with judgment reserved until a later date.

Source: bbc.co.uk